Paolo Amoroso — Rearranging the File Browser menu for Insphex

@2026-03-01 09:16 · 5 days ago

Insphex adds the Hexdump item to the File Browser menu to view the hex dump of the selected files. The initial implementation called the public API for adding commands at the top level of the menu.

To later move the item to the See submenu, which groups various file viewing commands, I resorted to list surgery, as the API doesn't support submenus. The problem is that internal system details can and do change, which happened to the File Browser menu and led to an Insphex load error.

I fixed the issue by reverting to the public API call, and now the item is back at the top level of the menu.

Insphex is a hex dump tool similar to the Linux command hexdump. I wrote it in Common Lisp on Medley Interlisp.

#insphex #CommonLisp #Interlisp #Lisp


Neil Munro — Ningle Tutorial 15: Pagination, Part 2

@2026-02-28 08:00 · 6 days ago

Contents

- Part 1 (Hello World)

- Part 2 (Basic Templates)

- Part 3 (Introduction to middleware and Static File management)

- Part 4 (Forms)

- Part 5 (Environmental Variables)

- Part 6 (Database Connections)

- Part 7 (Envy Configuration Switching)

- Part 8 (Mounting Middleware)

- Part 9 (Authentication System)

- Part 10 (Email)

- Part 11 (Posting Tweets & Advanced Database Queries)

- Part 12 (Clean Up & Bug Fix)

- Part 13 (Adding Comments)

- Part 14 (Pagination, Part 1)

- Part 15 (Pagination, Part 2)

Introduction

Welcome back! We will be revisiting the pagination from last time, but this time we are going to try and make it easier on ourselves. I built a package for pagination, mito-pager; the idea is that much of what we looked at in the last lesson was boilerplate and repetitive, so we should look at removing it.

I will say, mito-pager can do a little more than what I show here. It has two modes: you can use paginate-dao (named this way so that it is familiar to mito) to paginate over simple models; however, if you need to perform complex queries, there is a macro, with-pager, that you can use to paginate. It is this second form we will use in this tutorial.

There is one thing to bear in mind, when using mito-pager, you must implement your data retrieval functions in such a way to return a values object, as mito-pager relies on this to work.
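To make the "values object" requirement concrete, here is a minimal sketch of a retrieval function that returns a page of items as its first value and the total count as its second. The in-memory list and the name fetch-items are hypothetical stand-ins for a real database query; the (limit offset) argument order simply mirrors the get-posts adapter used in the controller in this tutorial.

```lisp
;; Hypothetical in-memory stand-in for a database table.
(defparameter *items* (loop for i from 1 to 7 collect i))

(defun fetch-items (limit offset)
  "Return one page of *ITEMS* and, as a second value, the total count."
  (let* ((total (length *items*))
         (start (min offset total))
         (end (min (+ start limit) total)))
    (values (subseq *items* start end) total)))

;; A caller reads both values with MULTIPLE-VALUE-BIND.
(multiple-value-bind (page total) (fetch-items 3 3)
  (list page total))
;; => ((4 5 6) 7)
```

The point is only the shape of the return: items first, count second, both delivered through values rather than packed into a list.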

I encourage you to try the library out in other use-cases and, of course, if you have ideas, please let me know.

Changes

Most of our changes are quite limited in scope, really it's just our controllers and models that need most of the edits.

ningle-tutorial-project.asd

We need to add the mito-pager package to our project asd file.

- :ningle-auth)

+ :ningle-auth

+ :mito-pager)

src/controllers.lisp

Here is the real payoff! I almost dreaded writing the sheer volume of the change but then realised it's so simple, we only need to change our index function, and it may be better to delete it all and write our new simplified version.

(defun index (params)

(let* ((user (gethash :user ningle:*session*))

(req-page (or (parse-integer (or (ingle:get-param "page" params) "1") :junk-allowed t) 1))

(req-limit (or (parse-integer (or (ingle:get-param "limit" params) "50") :junk-allowed t) 50)))

(flet ((get-posts (limit offset) (ningle-tutorial-project/models:posts user :offset offset :limit limit)))

(mito-pager:with-pager ((posts pager #'get-posts :page req-page :limit req-limit))

(djula:render-template* "main/index.html" nil :title "Home" :user user :posts posts :pager pager)))))

This is much nicer, and in my opinion, the controller should be this simple.
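The req-page and req-limit bindings both follow the same pattern: fall back to a default when the parameter is missing, and again when it does not parse. As a sketch of that pattern in isolation, the hypothetical helper below (param->integer is not part of ningle or ingle) takes a plain string, or NIL, in place of the value a parameter lookup would return.

```lisp
(defun param->integer (string default)
  "Parse STRING as an integer; return DEFAULT when STRING is NIL
or does not start with an integer."
  ;; With :JUNK-ALLOWED T, PARSE-INTEGER returns NIL instead of
  ;; signalling an error, so OR can supply the default.
  (or (parse-integer (or string "") :junk-allowed t)
      default))

(param->integer "2" 1)     ; => 2
(param->integer nil 1)     ; => 1
(param->integer "abc" 50)  ; => 50
(param->integer "12abc" 1) ; => 12
```

Note that :junk-allowed t accepts trailing junk ("12abc" parses as 12) and negative numbers, so range checks still have to happen somewhere after parsing.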

src/main.lisp

We need to ensure we include the templates from mito-pager, this is a simple one line change.

(defun start (&key (server :woo) (address "127.0.0.1") (port 8000))

(djula:add-template-directory (asdf:system-relative-pathname :ningle-tutorial-project "src/templates/"))

+ (djula:add-template-directory (asdf:system-relative-pathname :mito-pager "src/templates/"))

src/models.lisp

As mentioned at the top of this tutorial, we have to implement our data retrieval functions in a certain way. While there are some changes here, we ultimately end up with less code.

We can start by removing the count parameter, as we won't be needing it in this implementation, and since we don't need the count parameter anymore, the :around method can go too!

- (defgeneric posts (user &key offset limit count)

+ (defgeneric posts (user &key offset limit)

-

- (defmethod posts :around (user &key (offset 0) (limit 50) &allow-other-keys)

- (let ((count (mito:count-dao 'post))

- (offset (max 0 offset))

- (limit (max 1 limit)))

- (if (and (> count 0) (>= offset count))

- (let* ((page-count (max 1 (ceiling count limit)))

- (corrected-offset (* (1- page-count) limit)))

- (posts user :offset corrected-offset :limit limit))

- (call-next-method user :offset offset :limit limit :count count))))

There are two methods to look at. The first is when the type of user is user:

-

- (defmethod posts ((user user) &key offset limit count)

+ (defmethod posts ((user user) &key offset limit)

...

(values

- (mito:retrieve-by-sql sql :binds params)

- count

- offset)))

+ (mito:retrieve-by-sql sql :binds params)

+ (mito:count-dao 'post))))

The second is when the type of user is null:

-

- (defmethod posts ((user null) &key offset limit count)

+ (defmethod posts ((user null) &key offset limit)

...

(values

- (mito:retrieve-by-sql sql)

- count

- offset)))

+ (mito:retrieve-by-sql sql)

+ (mito:count-dao 'post))))

As you can see, all we are really doing is relying on mito to do the lion's share of the work, right down to the count.

src/templates/main/index.html

The change here is quite simple, all we need to do is to change the path to the partial, we need to simply point to the partial provided by mito-pager.

- {% include "partials/pager.html" with url="/" title="Posts" %}

+ {% include "mito-pager/partials/pager.html" with url="/" title="Posts" %}

src/templates/partials/pagination.html

This one is easy, we can delete it! mito-pager provides its own template, and while you can override it (if you so wish), in this tutorial we do not need it anymore.

Conclusion

I hope you will agree that this time, using a prebuilt package takes a lot of the pain out of pagination. I don't like to dictate what developers should, or shouldn't use, so that's why last time you were given the same information I had, so if you wish to build your own library, you can, or if you want to focus on getting things done, you are more than welcome to use mine, and of course, if you find issues please do let me know!

Learning Outcomes

| Level | Learning Outcome |

|---|---|

| Understand | Understand how third-party pagination libraries like mito-pager abstract boilerplate pagination logic, and how with-pager expects a fetch function returning (values items count) to handle page clamping, offset calculation, and boundary correction automatically. |

| Apply | Apply flet to define a local adapter function that bridges the project's posts generic function with mito-pager's expected (lambda (limit offset) ...) interface, and use with-pager to reduce controller complexity to its essential logic. |

| Analyse | Analyse what responsibilities were transferred from the manual pagination implementation to mito-pager — count caching, boundary checking, offset calculation, page correction, and range generation — contrasting the complexity of both approaches. |

| Create | Refactor a manual pagination implementation to use mito-pager by simplifying model methods to return (values items count), replacing complex multi-step controller calculations with with-pager, and delegating the pagination template partial to the library. |

GitHub

- The link for the custom pagination part of the tutorial's code is available here.

Common Lisp HyperSpec

| Symbol | Type | Why it appears in this lesson | CLHS |
|---|---|---|---|
| defpackage | Macro | Define project packages like ningle-tutorial-project/models, /forms, /controllers. | http://www.lispworks.com/documentation/HyperSpec/Body/m_defpac.htm |
| in-package | Macro | Enter each package before defining models, controllers, and functions. | http://www.lispworks.com/documentation/HyperSpec/Body/m_in_pkg.htm |
| defgeneric | Macro | Define the simplified generic posts function signature with keyword parameters offset and limit (the count parameter is removed). | http://www.lispworks.com/documentation/HyperSpec/Body/m_defgen.htm |
| defmethod | Macro | Implement the simplified posts methods for user and null types (the :around validation method is removed). | http://www.lispworks.com/documentation/HyperSpec/Body/m_defmet.htm |
| flet | Special Operator | Define the local get-posts adapter function that wraps posts to match mito-pager's expected (lambda (limit offset) ...) interface. | http://www.lispworks.com/documentation/HyperSpec/Body/s_flet_.htm |
| let* | Special Operator | Sequentially bind user, req-page, and req-limit in the controller where each value is used in subsequent bindings. | http://www.lispworks.com/documentation/HyperSpec/Body/s_let_l.htm |
| or | Macro | Provide fallback values when parsing page and limit parameters, defaulting to 1 and 50 respectively. | http://www.lispworks.com/documentation/HyperSpec/Body/m_or.htm |
| multiple-value-bind | Macro | Capture the SQL string and bind parameters returned by sxql:yield in the model methods. | http://www.lispworks.com/documentation/HyperSpec/Body/m_multip.htm |
| values | Function | Return two values from posts methods (the list of results and the total count), as required by mito-pager:with-pager. | http://www.lispworks.com/documentation/HyperSpec/Body/a_values.htm |
| parse-integer | Function | Convert string query parameters ("1", "50") to integers, with :junk-allowed t for safe parsing. | http://www.lispworks.com/documentation/HyperSpec/Body/f_parse_.htm |

Joe Marshall — binary-compose-left and binary-compose-right

@2026-02-16 21:35 · 18 days ago

If you have a unary function F, you can compose it with function G, H = F ∘ G, which means H(x) = F(G(x)). Instead of running x through F directly, you run it through G first and then run the output of G through F.

If F is a binary function, then you either compose it with a unary function G on the left input: H = F ∘left G, which means H(x, y) = F(G(x), y) or you compose it with a unary function G on the right input: H = F ∘right G, which means H(x, y) = F(x, G(y)).

(binary-compose-left f g) = (λ (x y) (f (g x) y))

(binary-compose-right f g) = (λ (x y) (f x (g y)))
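In Common Lisp, the two definitions above could be written as ordinary functions that return closures. This is a sketch of the idea, not the source of any particular library.

```lisp
(defun binary-compose-left (f g)
  "Return a binary function that applies G to its first argument before calling F."
  (lambda (x y) (funcall f (funcall g x) y)))

(defun binary-compose-right (f g)
  "Return a binary function that applies G to its second argument before calling F."
  (lambda (x y) (funcall f x (funcall g y))))

;; Using LIST as F makes it easy to see which argument G touched.
(funcall (binary-compose-left #'list #'1+) 1 10)  ; => (2 10)
(funcall (binary-compose-right #'list #'1+) 1 10) ; => (1 11)
```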

We could extend this to trinary functions and beyond, but it is less common to want to compose functions with more than two inputs.

binary-compose-right comes in handy when combined with fold-left. This identity holds

(fold-left (binary-compose-right f g) acc lst) <=> (fold-left f acc (map g lst))

but the right-hand side is less efficient because it requires an extra pass through the list to map g over it before folding. The left-hand side is more efficient because it composes g with f on the fly as it folds, so it only requires one pass through the list.
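The identity can be checked on a small input. The sketch below defines its own fold-left on top of CL:REDUCE and its own binary-compose-right so that it is self-contained; both are illustrative definitions rather than a library's code.

```lisp
(defun fold-left (f acc list)
  "Left fold: F receives the accumulator first and the element second."
  (reduce f list :initial-value acc))

(defun binary-compose-right (f g)
  (lambda (x y) (funcall f x (funcall g y))))

(defun square (x) (* x x))

;; One pass: G is applied to each element as the fold consumes it.
(fold-left (binary-compose-right #'+ #'square) 0 '(1 2 3)) ; => 14

;; Two passes: MAPCAR builds an intermediate list, then the fold runs.
(fold-left #'+ 0 (mapcar #'square '(1 2 3)))               ; => 14
```

Both forms produce the same result; the second one allocates the intermediate list of squares before folding.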

vindarel — 🖌️ Lisp screenshots: today's Common Lisp applications in action

@2026-02-11 22:35 · 23 days ago

I released a hopefully inspiring gallery:

lisp-screenshots.org

We divide the showcase under the categories Music, Games, Graphics and CAD, Science and industry, Web applications, Editors and Utilities.

Of course:

“Please don’t assume Lisp is only useful for...

thank you ;)

For more examples of companies using CL in production, see this list (contributions welcome, of course).

Don’t hesitate to share a screenshot of your app! It can be closed source and yourself as the sole user, as long as it has some sort of a GUI, and you use it. Historical success stories are for another collection.

The criteria are:

- built in Common Lisp

- with some sort of a graphical interface

- targeted at end users

- a clear reference, anywhere on the web, by email to me or simply as a comment here, that it is built in CL.

Details:

- it can be web applications whose server side is CL, even if the JS/HTML is classical web tech.

- no CLI interfaces. A readline app is OK but hey, we can do better.

- it can be closed-source or open-source, commercial, research or personal software

- regarding “end users”: I don’t see how to include a tool like CEPL, but I did include a screen of LispWorks.

- bonus point if it is developed in a company (we want it on https://github.com/azzamsa/awesome-lisp-companies/), be it a commercial product or an internal tool.

You can reach us on GitHub discussions, by email at (reverse "gro.zliam@stohsneercs+leradniv") and in the comments.

Best,

Joe Marshall — Vibe Coded Scheme Interpreter

@2026-02-07 00:28 · 27 days ago

Mark Friedman just released his Scheme-JS interpreter which is a Scheme with transparent JavaScript interoperability. See his blog post at furious ideas.

This interpreter apparently uses the techniques of lightweight stack inspection; Mark consulted me a bit about how that hack works. I'm looking forward to seeing the vibe coded architecture.

Gábor Melis — Untangling Literate Programming

@2026-02-02 00:00 · 33 days ago

Classical literate programming

A literate program intersperses narrative and code chunks. From this, source code to be fed to the compiler is generated by a process called tangling, and documentation by weaving. The specifics of tangling vary, but by allowing complete reordering and textual combination of chunks, it lets the human narrative drive the exposition at the cost of introducing an additional step into the write-compile-run cycle.

The general idea

It is easy to mistake this classical implementation of literate programming for the more general idea that we want to

- present code to human readers in pedagogical order with narrative added, and

- make changing code and its documentation together easy.

The advantages of literate programming follow from these desiderata.

Untangled LP

In many languages today, code order is far more flexible than in the era of early literate programming, so the narrative order can be approximated to some degree using docstrings and comments. Code and its documentation are side by side, so changing them together should also be easy. Since the normal source code now acts as the LP source, there is no more tangling in the programming loop. This is explored in more detail here.

Pros and cons

Having no tangling is a great benefit, as we get to keep our usual programming environment and tooling. On the other hand, bare-bones untangled LP faces the following potential problems.

Order mismatch: Things like inline functions and global variables may need to be defined before use. So, code order tends to deviate from narrative order to some degree.

Reduced locality: Our main tool to sync code and narrative is factoring out small, meaningful functions, which is just good programming style anyway. However, this may be undesirable for reasons of performance or readability. In such a case, we might end up with a larger function. Now, if we have only a single docstring for it, then it can be non-obvious which part of the code a sentence in the docstring refers to because of their distance and the presence of other parts.

No source code only view: Sometimes we want to see only the code. In classical LP, we can look at the tangled file. In untangled LP, editor support for hiding the narrative is the obvious solution.

No generated documentation: There is no more tangling nor weaving, but we still need another tool to generate documentation. Crucially, generating documentation is not in the main programming loop.

In general, whether classical or untangled LP is better depends on the severity of the above issues in the particular programming environment.

The Lisp and PAX view

MGL-PAX, a Common Lisp untangled LP solution, aims to minimize the above problems and fill in the gaps left by dropping tangling.

Order

Common Lisp is quite relaxed about the order of function definitions, but not so much about DEFMACRO, DEFVAR, DEFPARAMETER, DEFCONSTANT, DEFTYPE, DEFCLASS, DEFSTRUCT, DEFINE-COMPILER-MACRO, SET-MACRO-CHARACTER, SET-DISPATCH-MACRO-CHARACTER, DEFPACKAGE. However, code order can for the most part follow narrative order. In practice, we end up with some DEFVARs far from their parent DEFSECTIONs (but DECLAIM SPECIAL helps).

DEFSECTION controls documentation order. The references to Lisp definitions in DEFSECTION determine narrative order independently from the code order. This allows the few ordering problems to be patched over in the generated documentation.

Furthermore, because DEFSECTION can handle the exporting of symbols, we can declare the public interface piecemeal, right next to the relevant definitions, rather than in a monolithic DEFPACKAGE.

Locality

Lisp macros replace chunks in the rare, complex cases where a chunk is not a straightforward text substitution but takes parameters. Unlike text-based LP chunks, macros must operate on valid syntax trees (S-expressions), so they cannot be used to inject arbitrary text fragments (e.g. an unclosed parenthesis).

This constraint forces us to organize code into meaningful, syntactic units rather than arbitrary textual fragments, which results in more robust code. Within these units, macros allow us to reshape the syntax tree directly, handling scoping properly where text interpolation would fail.

PAX's NOTE is an extractable, named comment. NOTE can interleave with code within e.g. functions to minimize the distance between the logic and its documentation.

Also, PAX hooks into the development to provide easy navigation in the documentation tree.

Source code only view: PAX supports hiding verbose documentation (sections, docstrings, comments) in the editor.

Generating documentation

PAX extracts docstrings, NOTEs and combines them with narrative glue in DEFSECTIONs.

Documentation can be generated as static HTML/PDF files for offline reading or browsed live (in an Emacs buffer or via an in-built web server) during development.

LaTeX math is supported in both PDF and HTML (via MathJax, whether live or offline).

Joe Marshall — Some Libraries

@2026-02-01 23:15 · 33 days ago

Zach Beane has released the latest Quicklisp beta (January 2026), and I am pleased to have contributed to this release. Here are the highlights:

- dual-numbers: Implements dual numbers and automatic differentiation using dual numbers for Common Lisp.

- fold: FOLD-LEFT and FOLD-RIGHT functions.

- function: Provides higher-order functions for composition, currying, partial application, and other functional operations.

- generic-arithmetic: Defines replacement generic arithmetic functions with CLOS generic functions making it easier to extend the Common Lisp numeric tower to user defined numeric types.

- named-let: Overloads the LET macro to provide named let functionality similar to that found in Scheme.

Selected Functions

Dual numbers

Returns a new unary function that computes the exact derivative of the given function at any point x.

The returned function utilizes Dual Number arithmetic to perform automatic differentiation. It evaluates f(x + ε), where ε is the dual unit (an infinitesimal such that ε² = 0). The result is extracted from the infinitesimal part of the computation.

This method avoids the precision errors of numerical approximation (finite difference) and the complexity of symbolic differentiation. It works for any function composed of standard arithmetic operations and elementary functions supported by the dual-numbers library (e.g., sin, exp, log).

Example

(defun square (x) (* x x))

(let ((df (derivative #'square)))

(funcall df 5))

;; => 10

Implementation Note

The implementation relies on the generic-arithmetic system to ensure that mathematical operations within function can accept and return dual-number instances seamlessly.
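To illustrate the mechanism rather than the library itself, here is a self-contained sketch of dual-number differentiation. All names in it (the dual struct, ->dual, d+, d*, derivative-sketch) are hypothetical; the real dual-numbers library works through generic arithmetic so that ordinary + and * can be used, which this sketch does not attempt.

```lisp
(defstruct dual
  (value 0)   ; the ordinary part, f(x)
  (deriv 0))  ; the coefficient of ε, f'(x)

(defun ->dual (x)
  "Promote a plain number to a dual number with a zero ε part."
  (if (dual-p x) x (make-dual :value x :deriv 0)))

(defun d+ (a b)
  "(a + a'ε) + (b + b'ε) = (a + b) + (a' + b')ε"
  (let ((a (->dual a)) (b (->dual b)))
    (make-dual :value (+ (dual-value a) (dual-value b))
               :deriv (+ (dual-deriv a) (dual-deriv b)))))

(defun d* (a b)
  "(a + a'ε)(b + b'ε) = ab + (ab' + a'b)ε, because ε² = 0."
  (let ((a (->dual a)) (b (->dual b)))
    (make-dual :value (* (dual-value a) (dual-value b))
               :deriv (+ (* (dual-value a) (dual-deriv b))
                         (* (dual-deriv a) (dual-value b))))))

(defun derivative-sketch (f)
  "Return a function computing F's derivative by evaluating F at x + ε."
  (lambda (x)
    (dual-deriv (->dual (funcall f (make-dual :value x :deriv 1))))))

;; f(x) = x³ + x, written with the dual-aware operators.
(defun cube-plus-x (x) (d+ (d* x (d* x x)) x))

(funcall (derivative-sketch #'cube-plus-x) 2) ; => 13, i.e. 3x² + 1 at x = 2
```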

Function

Composes a binary function B(x, y) with a unary function U(z) applied to one of its arguments.

These combinators are essential for "lifting" unary operations into binary contexts, such as when folding a sequence where elements need preprocessing before aggregation.

Example

;; Summing the squares of a list

(fold-left (binary-compose-right #'+ #'square) 0 '(1 2 3))

;; => 14 ; (+ (+ (+ 0 (sq 1)) (sq 2)) (sq 3))

FOLD

Iterates over sequence, calling function with the current accumulator and the next element. The accumulator is initialized to initial-value.

This is a left-associative reduction. The function is applied as:

(function (function (function initial-value e1) e2) e3)

Unlike CL:REDUCE, the argument order for function is strictly defined: the first argument is always the accumulator, and the second argument is always the element from the sequence. This explicit ordering eliminates ambiguity and aligns with the functional programming convention found in Scheme and ML.

Arguments

- function: A binary function taking (accumulator, element).

- initial-value: The starting value of the accumulator.

- sequence: A list or vector to traverse.

Example

(fold-left (lambda (acc x) (cons x acc))

nil

'(1 2 3))

;; => (3 2 1) ; Effectively reverses the list

Named Let

Provides the functionality of the "Named Let" construct, commonly found in Scheme. This allows for the definition of recursive loops within a local scope without the verbosity of LABELS.

The macro binds the variables defined in bindings as in a standard let, but also binds name to a local function that can be called recursively with new values for those variables.

This effectively turns recursion into a concise, iterative structure. It is the idiomatic functional alternative to imperative loop constructs.

While commonly used for tail recursive loops, the function bound by named let is a first-class procedure that can be called anywhere or used as a value.

Example

;; Standard Countdown Loop

(let recur ((n 10))

(if (zerop n)

'blastoff

(progn

(print n)

(recur (1- n)))))

Implementation Note

The named-let library overloads the standard CL:LET macro to support this syntax directly if the first argument is a symbol. This allows users to use let uniformly for both simple bindings and recursive loops.
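For comparison, here is roughly what a named let stands for when written with plain LABELS in standard Common Lisp. This is a hand-written equivalent for illustration, not the library's actual macro expansion, and countdown-list is a hypothetical example function.

```lisp
;; With a named let this would read:
;;   (let recur ((n start) (acc '())) ...)
;; Without the library, the same loop needs LABELS plus an initial call.
(defun countdown-list (start)
  "Collect START, START-1, ..., 1 into a list."
  (labels ((recur (n acc)
             (if (zerop n)
                 (nreverse acc)
                 (recur (1- n) (cons n acc)))))
    (recur start '())))

(countdown-list 3) ; => (3 2 1)
```

The LABELS version separates the loop's definition from its initial arguments, which is the verbosity the named let form removes.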

Neil Munro — Ningle Tutorial 14: Pagination, Part 1

@2026-01-31 08:00 · 34 days ago

Contents

- Part 1 (Hello World)

- Part 2 (Basic Templates)

- Part 3 (Introduction to middleware and Static File management)

- Part 4 (Forms)

- Part 5 (Environmental Variables)

- Part 6 (Database Connections)

- Part 7 (Envy Configuration Switching)

- Part 8 (Mounting Middleware)

- Part 9 (Authentication System)

- Part 10 (Email)

- Part 11 (Posting Tweets & Advanced Database Queries)

- Part 12 (Clean Up & Bug Fix)

- Part 13 (Adding Comments)

- Part 14 (Pagination, Part 1)

- Part 15 (Pagination, Part 2)

Introduction

Hello and welcome back, I hope you all had a good festive season. I took a break last month as I usually get very busy in December, but lest you think I had stopped posting, I have prepared a two-part lesson this time: Pagination. We are first going to look at rolling your own pagination, and then at integrating a package I wrote, ningle-pager, to simplify the code. This way, if my package doesn't fit your needs, you have the information required to build your own solution.

In practical terms, something like a microblogging app would use infinite scrolling, but we don't have anywhere near enough data to present that as a lesson right now. Besides, pagination has a lot of uses; Google and Amazon use it for their products, so it must be pretty useful!

Theory

In SQL, there is the ability to LIMIT results, but also, the ability to start from an OFFSET, which ultimately does the heavy lifting for us. We have previously looked at SXQL, which is a thin layer upon SQL, so we can use (limit 50) and (offset 100) (or whatever values we want) to interact with the database, we will also use GET parameters like ?page=2&limit=50 (or something). So with this information we know the url patterns and we know what SXQL forms we want to use, we just have to design how our application will work internally.
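As a sketch of how the GET parameters relate to the SQL values, the hypothetical helper below turns a 1-based page number and a limit into the offset that would be handed to (offset ...). The name page->offset and the clamping of out-of-range inputs are assumptions made for this illustration.

```lisp
(defun page->offset (page limit)
  "Convert a 1-based PAGE number and a LIMIT into a row offset.
Pages below 1 and limits below 1 are clamped to 1."
  (* (1- (max 1 page)) (max 1 limit)))

(page->offset 1 50) ; => 0
(page->offset 2 50) ; => 50   (what ?page=2&limit=50 would ask for)
(page->offset 3 50) ; => 100
(page->offset 0 50) ; => 0    (clamped to the first page)
```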

Our solution will define an interface, any controller that needs to be paginated will:

- Accept a page keyword parameter

- Accept a limit keyword parameter

- Return a values list that has 3 items: the results, the total count, and the offset.

The work will touch the models, the controllers, and the templates:

Models

We are gonna get deep into the weeds with CLOS in how we implement our pagination in this part. There are multiple methods, so we will take each implementation one by one. You can learn more about how OOP is implemented in my older videos.

generic Method

We start with a generic definition, we already had one, but we are modifying it. Fun fact, the generic method defines all the parameters a method might use, but not all methods must use the arguments, which comes in real handy for us later:

(defgeneric posts (user &key offset limit count)

(:documentation "Gets the posts"))

Here we have a generic method. Generic methods do nothing on their own; they help us define what a method should do. Of course, under certain circumstances how a method does what it does may need to change, and this allows us to implement different specialised concrete methods, which we will look at below.

What we have done with this generic method is add key arguments offset, limit, and count, as we saw previously, all this does is declare a :documentation form.

:around method

As you may, or may not know, the Common Lisp Object System (clos for short) allows us to define, as we have done previously primary methods, these are methods that specialise on one (or more) of the parameters. When passed arguments at the point the method is called, the method matching the parameter type of the arguments passed will trigger. That is why our posts method specifies user to be a user object, or null and handles the logic in different ways. It also allows us to define auxiliary methods, which are known as :before, :after, and :around. The :before methods will run, well, before the related primary method is called, with each :before method being called by its most specific signature to its least. :after methods are the total opposite, they run after a primary method is run, and they run from the least specific version to the most specific. They would be where we might want to add signals, or logging, we could have a :before, and :after around the mito:save-dao that we use and the :before method sends a pre-save signal while the :after sends a post-save signal.

It is not, however the :before/:after methods we care about here, we in fact will write an :around, which is a more fundamental building block. :around methods control, how, when, or even if, a primary method gets called, the other methods can't control this. As previously discussed they have a specific order in which they run, so if we wanted to... say... capture arguments and do some processing on them because, I dunno, we should never trust user input, prior to running our primary method, an :around method is what we would need to use.

The real "magic" of how to do what we want to do is use an :around method. We will look at the complete implementation a little bit later, but we need to pause and ensure we really understand about method combination in Common Lisp.
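To see the ordering for yourself, here is a small standalone demonstration using standard method combination. The greet generic function and the *calls* variable are hypothetical and exist only for this example; they have nothing to do with the tutorial project.

```lisp
(defvar *calls* '()
  "Records the order in which the methods below run (most recent first).")

(defgeneric greet (name)
  (:documentation "Hypothetical generic function used only for this demonstration."))

(defmethod greet ((name string))
  (push :primary *calls*)
  (format nil "Hello, ~a" name))

(defmethod greet :before ((name string))
  (push :before *calls*))

(defmethod greet :after ((name string))
  (push :after *calls*))

(defmethod greet :around ((name string))
  ;; The :around method runs first and decides whether, and with which
  ;; arguments, the rest of the chain runs.
  (push :around-start *calls*)
  (prog1 (call-next-method (string-upcase name))
    (push :around-end *calls*)))

(defun run-greet (name)
  "Call GREET and return its result plus the recorded call order."
  (setf *calls* '())
  (let ((result (greet name)))
    (values result (reverse *calls*))))

(run-greet "lisp")
;; => "Hello, LISP"
;;    (:AROUND-START :BEFORE :PRIMARY :AFTER :AROUND-END)
```

The primary method sees the upcased argument because the :around method changed it before calling call-next-method, which is the same trick the pagination code relies on.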

As we mentioned in the defgeneric, not every method needs to use or specialise on every parameter, and in this :around method you will notice that the count is absent, that is by design, because the :around method will compute it and pass it onto the next method in the chain, instead it uses &allow-other-keys to allow these key arguments to be accepted, but also since they are unnamed, the compiler won't emit a warning that they're not used.
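The interplay between the generic function's keyword list and &allow-other-keys can be tried out in miniature. In this sketch, describe-page is a hypothetical generic function and the count of 42 is a made-up constant standing in for a real database count.

```lisp
(defgeneric describe-page (source &key offset limit count)
  (:documentation "Hypothetical generic function mirroring the shape of posts."))

;; The :around method names only OFFSET and LIMIT. &ALLOW-OTHER-KEYS keeps
;; its lambda list congruent with the generic function, which also
;; declares COUNT.
(defmethod describe-page :around (source &key (offset 0) (limit 50) &allow-other-keys)
  (call-next-method source
                    :offset (max 0 offset)
                    :limit (max 1 limit)
                    :count 42)) ; made-up stand-in for a computed count

;; The primary method names COUNT, so it receives what :around supplied.
(defmethod describe-page ((source symbol) &key offset limit count)
  (list source offset limit count))

(describe-page :posts :offset -5 :limit 0) ; => (:POSTS 0 1 42)
(describe-page :posts)                     ; => (:POSTS 0 50 42)
```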

Our implementation is here:

(defmethod posts :around (user &key (offset 0) (limit 50) &allow-other-keys)

(let ((count (mito:count-dao 'post))

(offset (max 0 offset))

(limit (max 1 limit)))

(if (and (> count 0) (>= offset count))

(let* ((page-count (max 1 (ceiling count limit)))

(corrected-offset (* (1- page-count) limit)))

(posts user :offset corrected-offset :limit limit))

(call-next-method user :offset offset :limit limit :count count))))

The first thing to note is the obvious :around keyword that comes after the posts name, this is how we declare a method as an :around method. The next thing to notice is that the count parameter is not declared, instead we use the &allow-other-keys, as discussed above. This method will modify some variables or recalculate the offset if it was invalid before either calling itself (to perform the recalculations) or call the next method with, well, call-next-method.

We begin with a let form that will get the number of items by using mito:count-dao, we determine the offset by getting the max of 0 or the offset, we also define limit as the max of 1 and limit.

The key check here is in the if form, which checks both that the count is larger than zero, (> count 0), and that the offset is greater than or equal to the count, (>= offset count). This tells us that an invalid condition exists: we can't request an offset at or beyond the number of items, so we have to handle it. Under these circumstances we need to get the new page-count by calculating (max 1 (ceiling count limit)), which rounds up the result of dividing count by limit and returns that, or 1, whichever is larger.

Once we have that we can calculate a corrected offset by using the formula (* (1- page-count) limit), to run through how this formula works, here are some examples, if we assume limit is defined as 50, we can increment the page-count by one each time to see how this calculation works:

- Page 1: (* (1- 1) 50) -> (* 0 50) -> 0

- Page 2: (* (1- 2) 50) -> (* 1 50) -> 50

- Page 3: (* (1- 3) 50) -> (* 2 50) -> 100

With this calculation done we can recursively call the method again, this time with the correct values, which brings us to our base case, calling the next method via call-next-method with the appropriate values which handily brings us to the specific methods now. We can actually dramatically simplify our primary methods thanks to the :around method.
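The correction logic can also be pulled out into a pure function to experiment with at the REPL. The sketch below copies the arithmetic of the :around method but takes the count as an argument instead of querying the database; the name correct-offset is hypothetical.

```lisp
(defun correct-offset (count offset limit)
  "Return OFFSET unchanged when it is valid, otherwise the offset of the
last page, using the same checks as the :around method."
  (let ((offset (max 0 offset))
        (limit (max 1 limit)))
    (if (and (> count 0) (>= offset count))
        (let ((page-count (max 1 (ceiling count limit))))
          (* (1- page-count) limit))
        offset)))

(correct-offset 120 50 50)  ; => 50   (valid, left alone)
(correct-offset 120 500 50) ; => 100  (3 pages, so the last page starts at 100)
(correct-offset 100 100 50) ; => 50   (offset equal to count is also invalid)
(correct-offset 120 -10 50) ; => 0    (negative offsets are clamped)
```

When count is 0, the offset is returned as-is, which matches the original: there is nothing to page through, so no correction is attempted.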

Something to bear in mind, our count here is real easy, cos we are just returning all posts, but a more complex application may need more complex logic to determine what and how you are counting.

user method

Since we don't need to handle any error state or recovery (because the :around method handles it), we can actually write simple methods that perform a query and return the results. We have also simplified the way in which we run queries, turns out the sxql:yield returns multiple values, the first is the SQL string, the second is a list of params to be spliced into it (to avoid sql injection attacks), so we set up a multiple-value-bind form to capture these, and we put our SQL together, we previously used :? which was fine, as that is the character used to be a place holder, but this way is nicer to write. The things you learn, eh?

Please note however, where in our :around method we didn't specify the count parameter that the generic method defines, in this primary method, we do!

All we do is use a values form to return the result of running the SQL with the parameters bound to it, the count (the total number of items), and the offset from which it starts returning results.

(defmethod posts ((user user) &key offset limit count)
  (multiple-value-bind (sql params)
      (sxql:yield
        (sxql:select
            (:post.*
             (:as :user.username :username)
             (:as (:count :likes.id) :like_count)
             (:as (:count :user_likes.id) :liked_by_user))
          (sxql:from :post)
          (sxql:left-join :user :on (:= :post.user_id :user.id))
          (sxql:left-join :likes :on (:= :post.id :likes.post_id))
          (sxql:left-join (:as :likes :user_likes)
                          :on (:and (:= :post.id :user_likes.post_id)
                                    (:= :user_likes.user_id (mito:object-id user))))
          (sxql:group-by :post.id)
          (sxql:order-by (:desc :post.created_at))
          (sxql:offset offset)
          (sxql:limit limit)))
    (values
      (mito:retrieve-by-sql sql :binds params)
      count
      offset)))

This makes our primary method much tighter, it runs a query and returns results, the :around method handles the recalculation logic (which is shared between this primary method and the next). Nice and simple.

null method

So having seen the form of our new primary methods above, we follow the same pattern for the primary method where the user is null. As before, this primary method accepts the count parameter.

(defmethod posts ((user null) &key offset limit count)
  (multiple-value-bind (sql)
      (sxql:yield
        (sxql:select
            (:post.*
             (:as :user.username :username)
             (:as (:count :likes.id) :like_count))
          (sxql:from :post)
          (sxql:left-join :user :on (:= :post.user_id :user.id))
          (sxql:left-join :likes :on (:= :post.id :likes.post_id))
          (sxql:group-by :post.id)
          (sxql:order-by (:desc :post.created_at))
          (sxql:limit limit)
          (sxql:offset offset)))
    (values
      (mito:retrieve-by-sql sql)
      count
      offset)))

The query is simpler, and we do not need to actually pass any variables into the SQL string, so we don't need the params value returned from the multiple-value-bind, which means we also don't need to use the :binds key argument into mito:retrieve-by-sql.
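The difference between the two primary methods comes down to how many of the returned values we bother to name. As a small illustration, here is a sketch using fake-yield, a hypothetical stand-in that returns two values in the same shape the text describes for sxql:yield (it is not part of SXQL).

```lisp
(defun fake-yield (limit offset)
  "Hypothetical stand-in for sxql:yield: an SQL string plus a list of bind values."
  (values "SELECT * FROM post LIMIT ? OFFSET ?" (list limit offset)))

(defun both-values ()
  ;; Name both values, as the user method does with (sql params).
  (multiple-value-bind (sql params) (fake-yield 50 0)
    (list sql params)))

(defun first-value-only ()
  ;; Name only the first value, as the null method does with (sql);
  ;; the second value is simply discarded.
  (multiple-value-bind (sql) (fake-yield 50 0)
    sql))
```

multiple-value-bind never complains about surplus values, which is why the null method can ignore the params list without any extra ceremony.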

And that's it, that's our models done!

Controllers

Our controller will be the index controller we built previously, but we need to modify it quite a bit to parse and process the information we need. Pagination has a lot of data, and we will need to ensure our templates can present the UI and data in an easy-to-use manner.

The controller will be so radically different as to be entirely new, it may be easier for you to delete the existing index controller and replace it with what we write here.

The first thing the controller needs to do is grab the GET parameters and validate them, we follow a basic formula to achieve this for the two parameters we need (page, and limit):

(or (parse-integer (or (ingle:get-param "page" params) "1") :junk-allowed t) 1)

(or (parse-integer (or (ingle:get-param "limit" params) "50") :junk-allowed t) 50)

As you can see these are basically identical; the only things that differ are the default values: in the case of page it is "1"/1, for limit it is "50"/50. To run through the logic we have some basic possibilities we need to handle.

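In the case where there is no parameter, which will be the case if no page=x is in the URL, the result of (ingle:get-param "page" params) will be nil, so the inner or supplies the default string "1". If the value of page is not numeric (such as a word, page=hi or something), get-param returns that string, parse-integer with :junk-allowed t returns nil rather than signalling an error, and the outer or supplies the default 1.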

In the case where page is provided and is a number, the process is the same, but (ingle:get-param "page" params) would return a number as a string.

We can see how that would evaluate here:

(or (parse-integer (or (ingle:get-param "page" params) "1") :junk-allowed t) 1)

(or (parse-integer (or nil "1") :junk-allowed t) 1)

(or (parse-integer "1" :junk-allowed t) 1)

(or 1 1)

1

The process repeats for the "limit" parameter. It's a lot of checking and handling, it would be nice if there were a library to handle this for us, but I have not yet found one, perhaps that will be our next topic!

NOTE! In this example we are permitting arbitrary limit values (we are learning), in practice, this should be limited to a maximum value to prevent users from requesting a page that may result in a Denial Of Service type event. What the exact value should be really depends on the data, it might be fine to get thousands of numbers in one go, but if your models are complicated, a smaller number may be better.

You could do something like this to limit... the limit: (limit (min 100 (max 1 limit)))
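Putting the parsing formula and the clamping note together, one possible shape for a small helper is sketched below. The name param-integer and its :lower/:upper keywords are hypothetical (they are not part of ingle or ningle); the helper takes the raw string that (ingle:get-param ...) would return, or nil.

```lisp
(defun param-integer (raw default &key (lower 1) upper)
  "Parse RAW (a string or NIL) as an integer, fall back to DEFAULT,
then clamp the result to at least LOWER and, if given, at most UPPER."
  (let* ((parsed (and raw (parse-integer raw :junk-allowed t)))
         (n (max lower (or parsed default))))
    (if upper (min upper n) n)))

;; Possible usage inside the controller's let (assumed, mirroring the text):
;;   (page  (param-integer (ingle:get-param "page" params) 1))
;;   (limit (param-integer (ingle:get-param "limit" params) 50 :upper 100))
```

Note that :junk-allowed t also accepts leading digits followed by junk, so a value such as "12abc" would parse as 12; whether that is acceptable depends on how strict you want to be.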

The let binding will therefore look like this

(let ((user (gethash :user ningle:*session*))
      (page (or (parse-integer (or (ingle:get-param "page" params) "1") :junk-allowed t) 1))
      (limit (or (parse-integer (or (ingle:get-param "limit" params) "50") :junk-allowed t) 50)))
  ...)

With those parameters validated, we can focus on building our paginated controller. Thanks to the work we did in the models we can pull the values out of the posts method with multiple-value-bind:

(let ((user (gethash :user ningle:*session*))
      (page (or (parse-integer (or (ingle:get-param "page" params) "1") :junk-allowed t) 1))
      (limit (or (parse-integer (or (ingle:get-param "limit" params) "50") :junk-allowed t) 50)))
  (multiple-value-bind (posts count offset)
      (ningle-tutorial-project/models:posts user :offset (* (1- page) limit) :limit limit)
    ...))

This enables us to now calculate the various values we need to pass through into a template to render the paginator, we need to generate 6 values.

page

The page variable is a way to determine what the current page is, it is calculated like so:

(1+ (floor offset limit))

From the offset we get from the multiple-value-bind we round down the value of dividing offset by the limit and add 1 to the value. If we assume, for example, an offset of 50 and a limit of 50, we can see how the page is determined.

(1+ (floor 50 50))

(1+ 1)

2

If we want to see something larger:

(1+ (floor 250 50))

(1+ 5)

6
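The controller converts in both directions: page to offset when calling the model, and offset back to page afterwards. As a quick sketch, the two conversions can be written as a pair of functions; the names page->offset and offset->page are hypothetical and only wrap the expressions already shown.

```lisp
(defun page->offset (page limit)
  "Offset of the first item on PAGE (pages start at 1)."
  (* (1- page) limit))

(defun offset->page (offset limit)
  "Page number that contains the item at OFFSET (offsets start at 0)."
  (1+ (floor offset limit)))
```

The two are inverses for any offset that sits on a page boundary, which is always the case for the corrected offset the :around method produces.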

page count

The page-count variable is a way to determine the total number of pages:

(max 1 (ceiling count limit))

Again, from the multiple-value-bind we get the count value, so we can expand this, assuming count is 500 and limit is 50.

(max 1 (ceiling 500 50))

(max 1 10)

10

In this manner, given a total number and a page size we want to split it into, we can see the total number of pages.
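The edge cases are worth checking by hand: a partially filled last page still counts as a page, and an empty table still reports one page. A small sketch, with total-pages as a hypothetical name for the same expression:

```lisp
(defun total-pages (count limit)
  "Number of pages needed to show COUNT items, LIMIT per page; never less than 1."
  (max 1 (ceiling count limit)))

;; (total-pages 500 50) => 10   ; exact fit
;; (total-pages 501 50) => 11   ; one extra item needs one extra page
;; (total-pages 0 50)   => 1    ; max keeps an empty result at a single page
```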

previous page number

Unlike the previous two calculations, prev-page can legitimately be nil. If we are already on the first page there is no previous page, so nil is fine. When we need some binary conditional logic where nil is an acceptable result, when is our friend.

(when (> page 1) (1- page))

When the page is bigger than one, return one less than the value of page. Because this is a when, either (1- page) will be returned or nil will be.

next page number

The inverse of the above:

(when (< page page-count) (1+ page))

When the page is smaller than the total number of pages, return one more than the value of page, or nil.
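Both neighbours can be checked together. The function below is a hypothetical wrapper (neighbour-pages is not a name from the project) that returns prev-page and next-page as two values using the same when forms.

```lisp
(defun neighbour-pages (page page-count)
  "Return the previous and next page numbers as two values; either may be NIL."
  (values (when (> page 1) (1- page))
          (when (< page page-count) (1+ page))))

;; (neighbour-pages 1 10)  => NIL, 2
;; (neighbour-pages 5 10)  => 4, 6
;; (neighbour-pages 10 10) => 9, NIL
```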

range start

Range start is to help the UI, typically in paginators, especially in large ones, there's a first, last, and current location, but often the current location has some pages to the left and right, this is the range. Now there's no real right number for the ranges, but I settled on 2.

(max 1 (- page 2))

Assuming page is 1, max will return 1, but if we are on, say, page 15, the location the range starts at is 13.

range end

Range end behaves like range start, except in the other direction, but we need to ensure we get the minimum of the page-count, in case we are on the last page.

(min page-count (+ page 2))
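To see how the two ends clamp, here is a sketch that computes both at once. The name page-range and the optional radius argument are hypothetical; the tutorial hard-codes the value 2.

```lisp
(defun page-range (page page-count &optional (radius 2))
  "Return range-start and range-end as two values, clamped to 1..PAGE-COUNT."
  (values (max 1 (- page radius))
          (min page-count (+ page radius))))

;; (page-range 15 20) => 13, 17   ; a full window around the current page
;; (page-range 1 20)  => 1, 3     ; clamped on the left
;; (page-range 20 20) => 18, 20   ; clamped on the right
```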

With these defined we can put them in a let* form.

(let ((user (gethash :user ningle:*session*))
      (page (or (parse-integer (or (ingle:get-param "page" params) "1") :junk-allowed t) 1))
      (limit (or (parse-integer (or (ingle:get-param "limit" params) "50") :junk-allowed t) 50)))
  (multiple-value-bind (posts count offset)
      (ningle-tutorial-project/models:posts user :offset (* (1- page) limit) :limit limit)
    (let* ((page (1+ (floor offset limit)))
           (page-count (max 1 (ceiling count limit)))
           (prev-page (when (> page 1) (1- page)))
           (next-page (when (< page page-count) (1+ page)))
           (range-start (max 1 (- page 2)))
           (range-end (min page-count (+ page 2))))
      ...)))

The final thing we need to do is return the result of djula:render-template*, but there is still more data we need to pass through, building upon the variables we defined. There are only 5 more.

pages

Pages is simply a list of the page numbers from range-start to range-end, which is easy enough to generate:

(loop :for idx :from range-start :to range-end :collect idx)

show-start-gap

The show-start-gap is a boolean that tells the template to render part of the paginator UI.

(> range-start 2)

This will return t or nil depending on if range-start is larger than 2.

show-end-gap

The show-end-gap is the inverse:

(< range-end (1- page-count))

This will return t or nil depending on if range-end is smaller than (1- page-count).
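The thresholds of 2 and (1- page-count) make more sense with numbers plugged in: the first and last pages are always rendered on their own, so a gap is only needed when the range does not sit right next to them. A sketch, with page-window as a hypothetical name bundling the three expressions:

```lisp
(defun page-window (range-start range-end page-count)
  "Return the list of pages in the range plus the two gap flags, as three values."
  (values (loop :for idx :from range-start :to range-end :collect idx)
          (> range-start 2)
          (< range-end (1- page-count))))

;; (page-window 13 17 20) => (13 14 15 16 17), T, T
;; (page-window 1 3 20)   => (1 2 3), NIL, T
;; (page-window 2 6 20)   => (2 3 4 5 6), NIL, T   ; 1 is adjacent to 2, no gap
```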

start-index

The start-index is the position of the first item on the current page, counted from the offset, so we can display something like "Showing x - y of z"; x would be our start-index.

(if (> count 0) (1+ offset) 0)

If the count is bigger than zero then we return one more than the offset, else we return 0 (the default starting offset being 0).

end-index

Again, this is the opposite of another thing, the start-index.

(if (> count 0) (min count (+ offset (length posts))) 0)

If count is bigger than zero then what we need is the smallest (min) of the count and offset plus the number of posts, or 0. It's possible there isn't a complete page's worth of items, so we need to ensure that we don't overrun.
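As a worked example of the "Showing x - y of z" numbers, the sketch below wraps both expressions in a hypothetical function, showing-indices, which takes the number of posts on the page instead of the list itself.

```lisp
(defun showing-indices (count offset n-posts)
  "Return start-index and end-index as two values for a page of N-POSTS items."
  (if (> count 0)
      (values (1+ offset)
              (min count (+ offset n-posts)))
      (values 0 0)))

;; (showing-indices 120 0 50)   => 1, 50     ; first page
;; (showing-indices 120 100 20) => 101, 120  ; short last page
;; (showing-indices 0 0 0)      => 0, 0      ; nothing to show
```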

With all that being said, we can now see the complete controller with the values rendered by djula:

(defun index (params)
  (let ((user (gethash :user ningle:*session*))
        (page (or (parse-integer (or (ingle:get-param "page" params) "1") :junk-allowed t) 1))
        (limit (or (parse-integer (or (ingle:get-param "limit" params) "50") :junk-allowed t) 50)))
    (multiple-value-bind (posts count offset)
        (ningle-tutorial-project/models:posts user :offset (* (1- page) limit) :limit limit)
      (let* ((page (1+ (floor offset limit)))
             (page-count (max 1 (ceiling count limit)))
             (prev-page (when (> page 1) (1- page)))
             (next-page (when (< page page-count) (1+ page)))
             (range-start (max 1 (- page 2)))
             (range-end (min page-count (+ page 2))))
        (djula:render-template*
         "main/index.html"
         nil
         :title "Home"
         :user user
         :posts posts
         :form (if user (cl-forms:find-form 'post) nil)
         :count count
         :page page
         :limit limit
         :page-count page-count
         :prev-page prev-page
         :next-page next-page
         :pages (loop :for idx :from range-start :to range-end :collect idx)
         :show-start-gap (> range-start 2)
         :show-end-gap (< range-end (1- page-count))
         :start-index (if (> count 0) (1+ offset) 0)
         :end-index (if (> count 0) (min count (+ offset (length posts))) 0))))))

I would have thought that having an invalid number would have triggered a 404, or perhaps a 400, but having tested this with Google, it seems that the convention is to default to page 1. So with that said and the controller in place, we can now write our templates.

Templates

index (src/templates/main/index.html)

Our index template doesn't require much change at all, we need to only add an include (from djula) to include the contents of one template inside another. Of course we have still to write the pagination template, but that is just below.

{% extends "base.html" %}

{% block content %}

<div class="container">

<!-- Post form -->

<div class="row mb-4">

<div class="col">

{% if form %}

{% form form %}

{% endif %}

</div>

</div>

<!-- Posts Section -->

+ {% include "partials/pagination.html" with url="/" title="Posts" %}

<div class="row">

...

</div>

+ {% include "partials/pagination.html" with url="/" title="Posts" %}

</div>

{% endblock %}

Something to bear in mind here is that include is designed so that you can pass data into the included template; in our case we pass through url and title, which we will use in the pagination html partial.

pagination (src/templates/partials/pagination.html)

Partials are a way to include reusable parts of html presentation in a template, they help us build isolated pieces of presentation logic that we might want to use over and over again all over our application, this is why we save them in a partials folder, because they are a partial piece of presentation logic.

This is the magic that makes the UI work. While we showed where it would be used in the index.html page, we need to look into what it does. I do use Bootstrap to make things look nice, but I'm very much NOT a frontend engineer, so I can't speak to how to make something look good without it, so inevitably many of the classes and much of the UI come from Bootstrap.

I will have to break the html down piece by piece to explain what it's all doing, but look at the final listing to see the complete file.

From the values we calculated though, we start by checking if the page count is bigger than 1, because if we have less than two pages, we can't paginate, therefore the whole UI is wrapped in:

{% if page-count > 1%}

...

{% endif %}

With that we can use the start-index, end-index, and count, to display the human readable part of the paginator.

{% if page-count > 1%}

<div class="table-pagination">

<div class="pagination-summary">

Showing {{ start-index }}-{{ end-index }} of {{ count }}

</div>

...

{% endif %}

We then set up a nav with a single ul element in it, in which we define the parts of the paginator as li tags.

{% if page-count > 1%}

...

<nav aria-label="{{ title }} pagination">

<ul class="pagination">

...

{% endif %}

Within this ul, we have to put all of our li elements which will contain the aspects of the UI. The first such item is:

...

<ul class="pagination">

<li class="page-item{% if not prev-page %} disabled{% endif %}">

{% if prev-page %}

<a class="page-link" href="{{ url }}?page={{ prev-page }}&limit={{ limit }}">Prev</a>

{% else %}

<span class="page-link">Prev</span>

{% endif %}

</li>

...

</ul>

This first li will set the disabled css class if prev-page is nil (that is, there is no previous page). It will again rely on prev-page to either render an a tag, building the url up to include prev-page and limit, or else render a span. This sets up the first element in the pagination UI.

The second li item checks the page, and if it is the first page, it sets the active class and renders a span. If it is NOT 1 then a link to the first page is rendered with an a tag, building up the url as we did before.

...

<li class="page-item{% if page == 1 %} active{% endif %}">

{% if page == 1 %}

<span class="page-link">1</span>

{% else %}

<a class="page-link" href="{{ url }}?page=1&limit={{ limit }}">1</a>

{% endif %}

</li>

...

Now that we have the beginning of the paginator with a "Prev" li element and the first li element, we might need to render an ellipsis (...) if the number of our pages is too large. We will use the show-start-gap boolean to render the ..., and we will repeat this pattern later on, in reverse, for the end gap.

...

{% if show-start-gap %}

<li class="page-item disabled"><span class="page-link">...</span></li>

{% endif %}

...

With that done, we can now render the page numbers:

{% for p in pages %}

{% if p != 1 and p != page-count %}

<li class="page-item{% if p == page %} active{% endif %}">

{% if p == page %}

<span class="page-link">{{ p }}</span>

{% else %}

<a class="page-link" href="{{ url }}?page={{ p }}&limit={{ limit }}">{{ p }}</a>

{% endif %}

</li>

{% endif %}

{% endfor %}

We loop over the list of page numbers we passed into the template as pages. If the loop iteration is NOT the first page (remember that this is a list of page numbers and starts from 1, not 0) and is NOT the last page (page-count), then we will render the li tag; those two pages are skipped here because they always get their own li elements. If we just so happen to be on the loop iteration that is the current page (page), we render a span tag and not a link, else we render a link so that we can directly navigate to this element in the paginator.

We then render the show-end-gap, using the pattern we used above:

...

{% if show-end-gap %}

<li class="page-item disabled"><span class="page-link">...</span></li>

{% endif %}

...

This will render an ellipsis (...) where needed.

Now to the final page in the paginator: we must check if we are on the final page, which, as we have seen before, we do in the class line, and again to determine whether we render a span tag (if we are on the final page) or an a tag (if we are not).

...

<li class="page-item{% if page == page-count %} active{% endif %}">

{% if page == page-count %}

<span class="page-link">{{ page-count }}</span>

{% else %}

<a class="page-link" href="{{ url }}?page={{ page-count }}&limit={{ limit }}">{{ page-count }}</a>

{% endif %}

</li>

...

And finally, we must render the "Next" part of the pagination:

...

<li class="page-item{% if not next-page %} disabled{% endif %}">

{% if next-page %}

<a class="page-link" href="{{ url }}?page={{ next-page }}&limit={{ limit }}">Next</a>

{% else %}

<span class="page-link">Next</span>

{% endif %}

</li>

...

If there is NOT a next page we add the disabled class, we then, as we have seen before use the next-page variable to determine if we render an a tag, or a span tag.
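To check the template logic without rendering anything, the sequence of items between "Prev" and "Next" can be modelled in plain Lisp. This is only a sketch of what the partial does: paginator-items is a hypothetical function, and the keyword :gap stands in for the ellipsis li.

```lisp
(defun paginator-items (page page-count &optional (radius 2))
  "Return the items the partial would render between Prev and Next:
page numbers, with :GAP wherever an ellipsis would appear."
  (let* ((range-start (max 1 (- page radius)))
         (range-end (min page-count (+ page radius)))
         ;; The for loop in the template skips the first and last page,
         ;; because they are rendered by their own li elements.
         (middle (loop :for p :from range-start :to range-end
                       :unless (or (= p 1) (= p page-count))
                         :collect p)))
    ;; The whole partial is wrapped in "if page-count > 1".
    (when (> page-count 1)
      (append (list 1)
              (when (> range-start 2) (list :gap))
              middle
              (when (< range-end (1- page-count)) (list :gap))
              (list page-count)))))

;; (paginator-items 15 20) => (1 :GAP 13 14 15 16 17 :GAP 20)
;; (paginator-items 1 20)  => (1 2 3 :GAP 20)
;; (paginator-items 1 3)   => (1 2 3)
```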

Full Listings

To see how all of this comes together here are the files in their entirety.

models.lisp

(defpackage ningle-tutorial-project/models

(:use :cl :mito :sxql)

(:import-from :ningle-auth/models #:user)

(:export #:post

#:id

#:content

#:comments

#:likes

#:user

#:liked-post-p

#:posts

#:parent

#:toggle-like))

(in-package ningle-tutorial-project/models)

(deftable post ()

((user :col-type ningle-auth/models:user :initarg :user :accessor user)

(parent :col-type (or :post :null) :initarg :parent :reader parent :initform nil)

(content :col-type (:varchar 140) :initarg :content :accessor content)))

(deftable likes ()

((user :col-type ningle-auth/models:user :initarg :user :reader user)

(post :col-type post :initarg :post :reader post))

(:unique-keys (user post)))

(defgeneric likes (post)

(:documentation "Returns the number of likes a post has"))

(defmethod likes ((post post))

(mito:count-dao 'likes :post post))

(defgeneric comments (post user)

(:documentation "Gets the comments for a logged in user"))

(defmethod comments ((post post) (user user))

(mito:retrieve-by-sql

(sxql:yield

(sxql:select

(:post.*

(:as :user.username :username)

(:as (:count :likes.id) :like_count)

(:as (:count :user_likes.id) :liked_by_user))

(sxql:from :post)

(sxql:where (:= :parent :?))

(sxql:left-join :user :on (:= :post.user_id :user.id))

(sxql:left-join :likes :on (:= :post.id :likes.post_id))

(sxql:left-join (:as :likes :user_likes)

:on (:and (:= :post.id :user_likes.post_id)

(:= :user_likes.user_id :?)))

(sxql:group-by :post.id)

(sxql:order-by (:desc :post.created_at))

(sxql:limit 50)))

:binds (list (mito:object-id post) (mito:object-id user))))

(defmethod comments ((post post) (user null))

(mito:retrieve-by-sql

(sxql:yield

(sxql:select

(:post.*

(:as :user.username :username)

(:as (:count :likes.id) :like_count))

(sxql:from :post)

(sxql:where (:= :parent :?))

(sxql:left-join :user :on (:= :post.user_id :user.id))

(sxql:left-join :likes :on (:= :post.id :likes.post_id))

(sxql:group-by :post.id)

(sxql:order-by (:desc :post.created_at))

(sxql:limit 50)))

:binds (list (mito:object-id post))))

(defgeneric toggle-like (user post)

(:documentation "Toggles the like of a user to a given post"))

(defmethod toggle-like ((ningle-auth/models:user user) (post post))

(let ((liked-post (liked-post-p user post)))

(if liked-post

(mito:delete-dao liked-post)

(mito:create-dao 'likes :post post :user user))

(not liked-post)))

(defgeneric liked-post-p (user post)

(:documentation "Returns true if a user likes a given post"))

(defmethod liked-post-p ((ningle-auth/models:user user) (post post))

(mito:find-dao 'likes :user user :post post))

(defgeneric posts (user &key offset limit count)

(:documentation "Gets the posts"))

(defmethod posts :around (user &key (offset 0) (limit 50) &allow-other-keys)

(let ((count (mito:count-dao 'post))

(offset (max 0 offset))

(limit (max 1 limit)))

(if (and (> count 0) (>= offset count))

(let* ((page-count (max 1 (ceiling count limit)))

(corrected-offset (* (1- page-count) limit)))

(posts user :offset corrected-offset :limit limit))

(call-next-method user :offset offset :limit limit :count count))))

(defmethod posts ((user user) &key offset limit count)

(multiple-value-bind (sql params)

(sxql:yield

(sxql:select

(:post.*

(:as :user.username :username)

(:as (:count :likes.id) :like_count)

(:as (:count :user_likes.id) :liked_by_user))

(sxql:from :post)

(sxql:left-join :user :on (:= :post.user_id :user.id))

(sxql:left-join :likes :on (:= :post.id :likes.post_id))

(sxql:left-join (:as :likes :user_likes)

:on (:and (:= :post.id :user_likes.post_id)

(:= :user_likes.user_id (mito:object-id user))))

(sxql:group-by :post.id)

(sxql:order-by (:desc :post.created_at))

(sxql:offset offset)

(sxql:limit limit)))

(values

(mito:retrieve-by-sql sql :binds params)

count

offset)))

(defmethod posts ((user null) &key offset limit count)

(multiple-value-bind (sql)

(sxql:yield

(sxql:select

(:post.*

(:as :user.username :username)

(:as (:count :likes.id) :like_count))

(sxql:from :post)

(sxql:left-join :user :on (:= :post.user_id :user.id))

(sxql:left-join :likes :on (:= :post.id :likes.post_id))

(sxql:group-by :post.id)

(sxql:order-by (:desc :post.created_at))

(sxql:limit limit)

(sxql:offset offset)))

(values

(mito:retrieve-by-sql sql)

count

offset)))

controllers.lisp

(defpackage ningle-tutorial-project/controllers

(:use :cl :sxql)

(:import-from :ningle-tutorial-project/forms

#:post

#:content

#:parent

#:comment)

(:export #:index

#:post-likes

#:single-post

#:post-content

#:post-comment

#:logged-in-profile

#:unauthorized-profile

#:people

#:person))

(in-package ningle-tutorial-project/controllers)

(defun index (params)

(let ((user (gethash :user ningle:*session*))

(page (or (parse-integer (or (ingle:get-param "page" params) "1") :junk-allowed t) 1))

(limit (or (parse-integer (or (ingle:get-param "limit" params) "50") :junk-allowed t) 50)))

(multiple-value-bind (posts count offset) (ningle-tutorial-project/models:posts user :offset (* (1- page) limit) :limit limit)

(let* ((page (1+ (floor offset limit)))

(page-count (max 1 (ceiling count limit)))

(prev-page (when (> page 1) (1- page)))

(next-page (when (< page page-count) (1+ page)))

(range-start (max 1 (- page 2)))

(range-end (min page-count (+ page 2))))

(djula:render-template*

"main/index.html"

nil

:title "Home"

:user user

:posts posts

:form (if user (cl-forms:find-form 'post) nil)

:count count

:page page

:limit limit

:page-count page-count

:prev-page prev-page

:next-page next-page

:pages (loop :for idx :from range-start :to range-end :collect idx)

:show-start-gap (> range-start 2)

:show-end-gap (< range-end (1- page-count))

:start-index (if (> count 0) (1+ offset) 0)

:end-index (if (> count 0) (min count (+ offset (length posts))) 0))))))

(defun post-likes (params)

(let* ((user (gethash :user ningle:*session*))

(post (mito:find-dao 'ningle-tutorial-project/models:post :id (parse-integer (ingle:get-param :id params))))

(res (make-hash-table :test 'equal)))

;; Bail out if post does not exist

(unless post

(setf (getf (lack.response:response-headers ningle:*response*) :content-type) "application/json")

(setf (gethash "error" res) "post not found")

(setf (lack.response:response-status ningle:*response*) 404)

(return-from post-likes (com.inuoe.jzon:stringify res)))

;; success, continue

(setf (gethash "post" res) (mito:object-id post))

(setf (gethash "liked" res) (ningle-tutorial-project/models:toggle-like user post))

(setf (gethash "likes" res) (ningle-tutorial-project/models:likes post))

(setf (getf (lack.response:response-headers ningle:*response*) :content-type) "application/json")

(setf (lack.response:response-status ningle:*response*) 201)

(com.inuoe.jzon:stringify res)))

(defun single-post (params)

(handler-case

(let ((post (mito:find-dao 'ningle-tutorial-project/models:post :id (parse-integer (ingle:get-param :id params))))

(form (cl-forms:find-form 'comment)))

(cl-forms:set-field-value form 'ningle-tutorial-project/forms:parent (mito:object-id post))

(djula:render-template* "main/post.html" nil

:title "Post"

:post post

:comments (ningle-tutorial-project/models:comments post (gethash :user ningle:*session*))

:likes (ningle-tutorial-project/models:likes post)

:form form

:user (gethash :user ningle:*session*)))

(parse-error (err)

(setf (lack.response:response-status ningle:*response*) 404)

(djula:render-template* "error.html" nil :title "Error" :error err))))

(defun post-content (params)

(let ((user (gethash :user ningle:*session*))

(form (cl-forms:find-form 'post)))

(handler-case

(progn

(cl-forms:handle-request form) ; Can throw an error if CSRF fails

(multiple-value-bind (valid errors)

(cl-forms:validate-form form)

(when errors

(format t "Errors: ~A~%" errors))

(when valid

(cl-forms:with-form-field-values (content) form

(mito:create-dao 'ningle-tutorial-project/models:post :content content :user user :parent nil)

(ingle:redirect "/")))))

(simple-error (err)

(setf (lack.response:response-status ningle:*response*) 403)

(djula:render-template* "error.html" nil :title "Error" :error err)))))

(defun post-comment (params)

(let ((user (gethash :user ningle:*session*))

(form (cl-forms:find-form 'comment)))

(handler-case

(progn

(cl-forms:handle-request form) ; Can throw an error if CSRF fails

(multiple-value-bind (valid errors)

(cl-forms:validate-form form)

(when errors

(format t "Errors: ~A~%" errors))

(when valid

(cl-forms:with-form-field-values (content parent) form

(mito:create-dao 'ningle-tutorial-project/models:post :content content :user user :parent (parse-integer parent))

(ingle:redirect "/")))))

(simple-error (err)

(setf (lack.response:response-status ningle:*response*) 403)

(djula:render-template* "error.html" nil :title "Error" :error err)))))

(defun logged-in-profile (params)

(let ((user (gethash :user ningle:*session*)))

(djula:render-template* "main/profile.html" nil :title "Profile" :user user)))

(defun unauthorized-profile (params)

(setf (lack.response:response-status ningle:*response*) 403)

(djula:render-template* "error.html" nil :title "Error" :error "Unauthorized"))

(defun people (params)

(let ((users (mito:retrieve-dao 'ningle-auth/models:user)))

(djula:render-template* "main/people.html" nil :title "People" :users users :user (cu-sith:logged-in-p))))

(defun person (params)

(let* ((username-or-email (ingle:get-param :person params))

(person (first (mito:select-dao

'ningle-auth/models:user

(where (:or (:= :username username-or-email)

(:= :email username-or-email)))))))

(djula:render-template* "main/person.html" nil :title "Person" :person person :user (cu-sith:logged-in-p))))

index.html

{% extends "base.html" %}

{% block content %}

<div class="container">

<!-- Post form -->

<div class="row mb-4">

<div class="col">

{% if form %}

{% form form %}

{% endif %}

</div>

</div>

<!-- Posts Section -->

{% include "partials/pagination.html" with url="/" title="Posts" %}

<div class="row">

<div class="col-12">

{% for post in posts %}

<div class="card post mb-3" data-href="/post/{{ post.id }}">

<div class="card-body">

<h5 class="card-title mb-2">{{ post.content }}</h5>

<p class="card-subtitle text-muted mb-0">@{{ post.username }}</p>

</div>

<div class="card-footer d-flex justify-content-between align-items-center">

<button type="button"

class="btn btn-sm btn-outline-primary like-button"

data-post-id="{{ post.id }}"

data-logged-in="{% if user.username != "" %}true{% else %}false{% endif %}"

data-liked="{% if post.liked-by-user == 1 %}1{% else %}0{% endif %}"

aria-label="Like post {{ post.id }}">

{% if post.liked-by-user == 1 %}

<i class="bi bi-hand-thumbs-up-fill text-primary" aria-hidden="true"></i>

{% else %}

<i class="bi bi-hand-thumbs-up text-muted" aria-hidden="true"></i>

{% endif %}

<span class="ms-1 like-count">{{ post.like-count }}</span>

</button>

<small class="text-muted">Posted on: {{ post.created-at }}</small>

</div>

</div>

{% endfor %}

{% if not posts %}

<div class="text-center">

<p class="text-muted">No posts to display.</p>

</div>

{% endif %}

</div>

</div>

{% include "partials/pagination.html" with url="/" title="Posts" %}

</div>

{% endblock %}

{% block js %}

document.querySelectorAll(".like-button").forEach(btn => {

btn.addEventListener("click", function (e) {

e.stopPropagation();

e.preventDefault();

// Check login

if (btn.dataset.loggedIn !== "true") {

alert("You must be logged in to like posts.");

return;

}

const postId = btn.dataset.postId;

const countSpan = btn.querySelector(".like-count");

const icon = btn.querySelector("i");

const liked = Number(btn.dataset.liked) === 1;

const previous = parseInt(countSpan.textContent, 10) || 0;

const url = `/post/${postId}/likes`;

// Optimistic UI toggle

countSpan.textContent = liked ? previous - 1 : previous + 1;

btn.dataset.liked = liked ? "0" : "1"; // keep "1"/"0" so Number(btn.dataset.liked) === 1 still works on the next click

// Toggle icon classes optimistically

if (liked) {

// Currently liked, so unlike it

icon.className = "bi bi-hand-thumbs-up text-muted";

} else {

// Currently not liked, so like it

icon.className = "bi bi-hand-thumbs-up-fill text-primary";

}

const csrfTokenMeta = document.querySelector('meta[name="csrf-token"]');

const headers = { "Content-Type": "application/json" };

if (csrfTokenMeta) headers["X-CSRF-Token"] = csrfTokenMeta.getAttribute("content");

fetch(url, {

method: "POST",

headers: headers,

body: JSON.stringify({ toggle: true })

})

.then(resp => {

if (!resp.ok) {

// Revert optimistic changes on error

countSpan.textContent = previous;

btn.dataset.liked = liked ? 1 : 0;

if (liked) {

icon.className = "bi bi-hand-thumbs-up-fill text-primary";

} else {

icon.className = "bi bi-hand-thumbs-up text-muted";

}

throw new Error("Network response was not ok");

}

return resp.json();

})

.then(data => {

if (data && typeof data.likes !== "undefined") {

countSpan.textContent = data.likes;

btn.dataset.liked = data.liked ? "1" : "0"; // same "1"/"0" encoding the template writes into data-liked

// Update icon based on server response

if (data.liked) {

icon.className = "bi bi-hand-thumbs-up-fill text-primary";

} else {

icon.className = "bi bi-hand-thumbs-up text-muted";

}

}

})

.catch(err => {

console.error("Like failed:", err);

// Revert optimistic changes on error

countSpan.textContent = previous;

btn.dataset.liked = liked ? 1 : 0;

if (liked) {

icon.className = "bi bi-hand-thumbs-up-fill text-primary";

} else {

icon.className = "bi bi-hand-thumbs-up text-muted";

}

});

});

});

document.querySelectorAll(".card.post").forEach(card => {

card.addEventListener("click", function () {

const href = card.dataset.href;

if (href) {

window.location.href = href;

}

});

});

{% endblock %}

pagination.html

{% if page-count > 1 %}

<div class="table-pagination">

<div class="pagination-summary">

Showing {{ start-index }}-{{ end-index }} of {{ count }}

</div>

<nav aria-label="{{ title }} pagination">

<ul class="pagination">

<li class="page-item{% if not prev-page %} disabled{% endif %}">

{% if prev-page %}

<a class="page-link" href="{{ url }}?page={{ prev-page }}&limit={{ limit }}">Prev</a>

{% else %}

<span class="page-link">Prev</span>

{% endif %}

</li>

<li class="page-item{% if page == 1 %} active{% endif %}">

{% if page == 1 %}

<span class="page-link">1</span>

{% else %}

<a class="page-link" href="{{ url }}?page=1&limit={{ limit }}">1</a>

{% endif %}

</li>

{% if show-start-gap %}

<li class="page-item disabled"><span class="page-link">...</span></li>

{% endif %}

{% for p in pages %}

{% if p != 1 and p != page-count %}

<li class="page-item{% if p == page %} active{% endif %}">

{% if p == page %}

<span class="page-link">{{ p }}</span>

{% else %}

<a class="page-link" href="{{ url }}?page={{ p }}&limit={{ limit }}">{{ p }}</a>

{% endif %}

</li>

{% endif %}

{% endfor %}

{% if show-end-gap %}

<li class="page-item disabled"><span class="page-link">...</span></li>

{% endif %}

<li class="page-item{% if page == page-count %} active{% endif %}">

{% if page == page-count %}

<span class="page-link">{{ page-count }}</span>

{% else %}

<a class="page-link" href="{{ url }}?page={{ page-count }}&limit={{ limit }}">{{ page-count }}</a>

{% endif %}

</li>

<li class="page-item{% if not next-page %} disabled{% endif %}">

{% if next-page %}

<a class="page-link" href="{{ url }}?page={{ next-page }}&limit={{ limit }}">Next</a>

{% else %}

<span class="page-link">Next</span>

{% endif %}

</li>

</ul>

</nav>

</div>

{% endif %}
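The partial above only renders values; it expects the controller to have already computed page, page-count, prev-page, next-page, start-index, end-index, pages and the two gap flags. As a rough sketch of how those values relate to each other, something like the following could be used. The function name pagination-values, the returned argument and the window keyword are assumptions made for illustration, not the tutorial's actual controller code.

```lisp
;; Hypothetical helper: derive the values the pagination partial expects.
;; COUNT is the total number of rows, OFFSET and LIMIT are the query
;; parameters, RETURNED is the number of rows actually fetched for this page.
;; WINDOW (an assumption) is how many page links to show either side of PAGE.
(defun pagination-values (count offset limit returned &key (window 2))
  (let* ((page (1+ (floor offset limit)))            ; offset -> 1-based page
         (page-count (max 1 (ceiling count limit)))  ; total pages, at least 1
         (prev-page (when (> page 1) (1- page)))     ; nil on the first page
         (next-page (when (< page page-count) (1+ page))) ; nil on the last page
         (range-start (max 1 (- page window)))
         (range-end (min page-count (+ page window)))
         (start-index (if (plusp returned) (1+ offset) 0))
         (end-index (min count (+ offset returned))))
    (list :page page
          :page-count page-count
          :prev-page prev-page
          :next-page next-page
          :start-index start-index
          :end-index end-index
          :pages (loop for p from range-start to range-end collect p)
          ;; A gap is shown when the window does not touch page 1 or the last page.
          :show-start-gap (> range-start 2)
          :show-end-gap (< range-end (1- page-count)))))

;; Example: 95 rows, 10 per page, currently at offset 40 (page 5).
(pagination-values 95 40 10 10)
```

The template skips p when it equals 1 or page-count, so it does not matter if the pages list overlaps the fixed first and last links.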

Conclusion

Phew, that was a long one, and honestly it kinda got into the weeds a bit. Thank you for persisting with it and following it to the end. It took quite a while to study and get right. As you no doubt felt while writing it, there were a LOT of calculations and a lot of data being passed into the template, and it would be awful to have to repeat that everywhere you wanted to perform pagination. Don't worry: this is what we want to solve in part 2, with a more generalised pagination system that doesn't require quite so much logic in the controllers.

If you found this lesson helpful, consider experimenting with different page sizes or adding pagination to the comments on individual posts. The patterns we've established here are reusable throughout your application.

If you found bugs or issues, please do let me know. I correct things when told, and I try to fix them as quickly as possible.

Learning Outcomes

| Level | Learning Outcome |

|---|---|

| Understand | Understand how SQL LIMIT and OFFSET work together to enable pagination, and how query parameters like ?page=2&limit=50 map to database queries through SXQL's (sxql:limit n) and (sxql:offset n) forms. |

| Apply | Apply CLOS method combination (:around methods with call-next-method) to implement parameter validation and error recovery, ensuring offset never exceeds total count and calculating corrected page numbers when needed. |

| Analyse | Analyse the mathematical relationships in pagination (page-to-offset conversion, range calculations, gap detection) and trace how values flow through the :around method, primary methods, controller calculations, and template rendering. |

| Create | Create a complete pagination system by combining :around methods, SQL queries with LIMIT/OFFSET, controller calculations (page/offset conversions, range calculations), and reusable template partials that handle edge cases like invalid page numbers and single-page results. |

Github

- The link for the custom pagination part of the tutorial's code is available here.

Common Lisp HyperSpec

| Symbol | Type | Why it appears in this lesson | CLHS |

|---|---|---|---|

| defpackage | Macro | Define project packages like ningle-tutorial-project/models, /forms, /controllers. | http://www.lispworks.com/documentation/HyperSpec/Body/m_defpac.htm |

| in-package | Macro | Enter each package before defining models, controllers, and functions. | http://www.lispworks.com/documentation/HyperSpec/Body/m_in_pkg.htm |

| defgeneric | Macro | Define the generic posts function signature with keyword parameters offset, limit, and count. | http://www.lispworks.com/documentation/HyperSpec/Body/m_defgen.htm |

| defmethod | Macro | Implement specialized posts methods for user and null types, and the :around method for validation. | http://www.lispworks.com/documentation/HyperSpec/Body/m_defmet.htm |

| call-next-method | Function | Invoke the next most specific method from within the :around method after validating parameters. | http://www.lispworks.com/documentation/HyperSpec/Body/f_call_n.htm |

| let | Special Operator | Bind local variables in the :around method (count, offset, limit) and controller (user, page, limit). | http://www.lispworks.com/documentation/HyperSpec/Body/s_let_l.htm |

| let* | Special Operator | Sequentially bind pagination calculations (page, page-count, prev-page, etc.) where each depends on previous values. | http://www.lispworks.com/documentation/HyperSpec/Body/s_let_l.htm |

| if | Special Operator | Check conditions like whether offset exceeds count, or whether count is greater than zero. | http://www.lispworks.com/documentation/HyperSpec/Body/s_if.htm |

| when | Macro | Calculate prev-page and next-page only when the condition is true, returning nil otherwise. | http://www.lispworks.com/documentation/HyperSpec/Body/m_when_.htm |

| or | Macro | Provide fallback values when parsing page and limit parameters, defaulting to 1 and 50 respectively. | http://www.lispworks.com/documentation/HyperSpec/Body/m_or.htm |

| and | Macro | Check multiple conditions in the :around method (count > 0 AND offset >= count) before recalculating. | http://www.lispworks.com/documentation/HyperSpec/Body/m_and.htm |

| multiple-value-bind | Macro | Capture the three return values from posts (posts, count, offset) and from sxql:yield (sql, params). | http://www.lispworks.com/documentation/HyperSpec/Body/m_multip.htm |

| values | Function | Return multiple values from posts methods (results, count, offset) to the caller. | http://www.lispworks.com/documentation/HyperSpec/Body/a_values.htm |

| loop | Macro | Generate the list of page numbers from range-start to range-end for template rendering. | http://www.lispworks.com/documentation/HyperSpec/Body/m_loop.htm |

| parse-integer | Function | Convert string query parameters ("1", "50") to integers, with :junk-allowed t for safe parsing. | http://www.lispworks.com/documentation/HyperSpec/Body/f_parse_.htm |

| floor | Function | Round down the result of offset / limit to calculate the current page number. | http://www.lispworks.com/documentation/HyperSpec/Body/f_floorc.htm |

| ceiling | Function | Round up the result of count / limit to calculate the total number of pages. | http://www.lispworks.com/documentation/HyperSpec/Body/f_floorc.htm |

| max | Function | Ensure offset and limit never go below their minimum valid values (0 and 1), and calculate range-start. | http://www.lispworks.com/documentation/HyperSpec/Body/f_max_m.htm |

| min | Function | Ensure range-end doesn't exceed page-count and calculate end-index correctly. | http://www.lispworks.com/documentation/HyperSpec/Body/f_max_m.htm |

| 1+ / 1- | Function | Increment/decrement page numbers for navigation (next/previous page, page number conversions). | http://www.lispworks.com/documentation/HyperSpec/Body/f_1pl_1.htm |

| length | Function | Get the count of posts returned to calculate end-index accurately. | http://www.lispworks.com/documentation/HyperSpec/Body/f_length.htm |

Joe Marshall — Advent of Code 2025, brief recap

@2026-01-29 19:22 · 36 days ago

I did the Advent of Code this year using Common Lisp. Last year I attempted to use the series library as the primary iteration mechanism to see how it went. This year, I just wrote straightforward Common Lisp. It would be super boring to walk through the solutions in detail, so I've decided to just give some highlights here.

Day 2: Repeating Strings

Day 2 is easily dealt with using the Common Lisp sequence manipulation functions giving special consideration to the index arguments. Part 1 is a simple comparison of two halves of a string. We compare the string to itself, but with different start and end points:

(defun double-string? (s)

(let ((l (length s)))

(multiple-value-bind (mid rem) (floor l 2)

(and (zerop rem)

(string= s s

:start1 0 :end1 mid

:start2 mid :end2 l)))))

Part 2 asks us to find strings which are made up of some substring repeated multiple times.

(defun repeating-string? (s)

(search s (concatenate 'string s s)

:start2 1

:end2 (- (* (length s) 2) 1)

:test #'string=))
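The search above relies on a doubling trick: a string made of a repeated unit reappears inside its own doubling at an offset strictly between 0 and its length, so dropping the first and last characters of the doubled string rules out the two trivial matches. As a small sketch built on the same idea, the index that search returns can be read as the length of the shortest repeating unit. The name repeating-unit is hypothetical and not part of the original solution.

```lisp
;; Hypothetical variant of repeating-string? that returns the shortest
;; repeating unit of S, or NIL when S is not a repetition of a shorter string.
(defun repeating-unit (s)
  (when (> (length s) 1)                      ; "" and 1-char strings never repeat
    (let ((period (search s (concatenate 'string s s)
                          :start2 1           ; skip the trivial match at 0
                          :end2 (1- (* 2 (length s)))))) ; and the one at (length s)
      ;; SEARCH returns an index into the doubled string, which is the period.
      (when period
        (subseq s 0 period)))))

(repeating-unit "abab")      ; => "ab"
(repeating-unit "abcabcabc") ; => "abc"
(repeating-unit "abc")       ; => NIL
```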

Day 3: Choosing digits

Day 3 has us maximizing a number by choosing a set of digits where we cannot change the relative position of the digits. A greedy algorithm works well here. Assume we have already chosen some digits and are now looking to choose the next digit. We accumulate the digit on the right. Now if we have too many digits, we discard one. We choose to discard whatever digit gives us the maximum resulting value.

(defun omit-one-digit (n)

(map 'list #'digit-list->number (removals (number->digit-list n))))

> (omit-one-digit 314159)

(14159 34159 31159 31459 31419 31415)

(defun best-n (i digit-count)

(fold-left (lambda (answer digit)

(let ((next (+ (* answer 10) digit)))

(if (> next (expt 10 digit-count))

(fold-left #'max most-negative-fixnum (omit-one-digit next))

next)))

0

(number->digit-list i)))

(defun part-1 ()

(collect-sum

(map-fn 'integer (lambda (i) (best-n i 2))

(scan-file (input-pathname) #'read))))

(defun part-2 ()

(collect-sum

(map-fn 'integer (lambda (i) (best-n i 12))

(scan-file (input-pathname) #'read))))
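The listing above calls several helpers that are not shown: number->digit-list, digit-list->number, removals and fold-left. The definitions below are guesses at what they might look like, inferred from the sample output of omit-one-digit; they are not the author's code. They are enough to try best-n at the REPL without the file-reading parts.

```lisp
;; Assumed helper: 314159 -> (3 1 4 1 5 9). Non-negative integers only.
(defun number->digit-list (n)
  (let ((digits '()))
    (loop
      (multiple-value-bind (quotient digit) (floor n 10)
        (push digit digits)
        (when (zerop quotient) (return digits))
        (setf n quotient)))))

;; Assumed helper: (3 1 4) -> 314.
(defun digit-list->number (digits)
  (reduce (lambda (acc d) (+ (* acc 10) d)) digits :initial-value 0))

;; Assumed helper: every way of dropping exactly one element, in order.
(defun removals (list)
  (loop for i from 0 below (length list)
        collect (append (subseq list 0 i) (subseq list (1+ i)))))

;; Assumed helper: left fold with an explicit initial value.
(defun fold-left (f init list)
  (reduce f list :initial-value init))

;; The two functions from the post, repeated so the block is self-contained.
(defun omit-one-digit (n)
  (map 'list #'digit-list->number (removals (number->digit-list n))))

(defun best-n (i digit-count)
  (fold-left (lambda (answer digit)
               (let ((next (+ (* answer 10) digit)))
                 (if (> next (expt 10 digit-count))
                     (fold-left #'max most-negative-fixnum (omit-one-digit next))
                     next)))
             0
             (number->digit-list i)))

(omit-one-digit 314159)        ; => (14159 34159 31159 31459 31419 31415)
(best-n 987654321111111 2)     ; => 98
(best-n 811111111111119 2)     ; => 89
```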

Day 6: Columns of digits

Day 6 has us manipulating columns of digits. If you have a list of columns, you can transpose it to a list of rows using this one-liner:

(defun transpose (matrix) (apply #'map 'list #'list matrix))
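To see why this works: apply spreads the inner lists out as separate arguments to map, and map then calls list on the first elements of every inner list, then on the second elements, and so on. A quick illustration, with the definition repeated so the snippet runs on its own:

```lisp
(defun transpose (matrix) (apply #'map 'list #'list matrix))

;; Equivalent to (map 'list #'list '(1 2 3) '(4 5 6)).
(transpose '((1 2 3) (4 5 6)))   ; => ((1 4) (2 5) (3 6))
```

One caveat: because the inner lists become function arguments, the number of them is bounded by call-arguments-limit, so this is best suited to inputs with a modest number of columns.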

Days 8 and 10: Memoizing

Day 8 has us counting paths through a beam splitter apparatus while Day 10 has us counting paths through a directed graph. Both problems are easily solved using a depth-first recursion, but the number of solutions grows exponentially and soon takes too long for the machine to return an answer. If you memoize the function, however, it completes in no time at all.
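As a rough illustration of the memoization idea (not the actual solution), here is a sketch that counts paths in a small directed acyclic graph, caching the count for each node in a hash table so every node is expanded only once. The graph representation (an alist from node to successors) and the name count-paths are assumptions made for this example.

```lisp
;; Hypothetical sketch: count paths from START to GOAL in an acyclic graph.
;; GRAPH is an alist mapping each node to the list of its successors.
(defun count-paths (graph start goal)
  (let ((memo (make-hash-table :test #'eql)))
    (labels ((walk (node)
               (if (eql node goal)
                   1
                   (multiple-value-bind (cached found) (gethash node memo)
                     (if found
                         cached               ; reuse the stored count
                         (setf (gethash node memo)
                               (loop for next in (cdr (assoc node graph))
                                     sum (walk next))))))))
      (walk start))))

;; Two ways from A to D, then two ways from D to G.
(count-paths '((a b c) (b d) (c d) (d e f) (e g) (f g)) 'a 'g) ; => 4
```

Without the table, the shared node D would be explored once per route that reaches it, which is where the exponential growth comes from.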

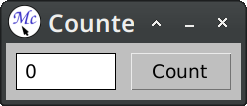

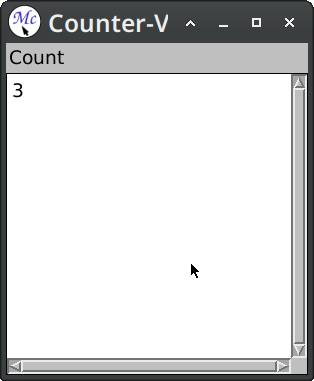

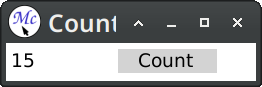

TurtleWare — McCLIM and 7GUIs - Part 1: The Counter

@2026-01-26 00:00 · 40 days ago

For the last two months I've been polishing the upcoming release of McCLIM. The most notable change is the rewriting of the input editing and accepting-values abstractions. As it happens, I got tired of it, so as a breather I've decided to tackle something I had in mind for some time to improve the McCLIM manual – namely the 7GUIs: A GUI Programming Benchmark.

This challenge presents seven distinct tasks commonly found in graphical interface requirements. In this post I'll address the first challenge - The Counter. It is a fairly easy task, a warm-up of sorts. The description states: